Scientific writing

I enjoy writing, particularly explaining maths and science in an easy-to-understand way. Here are some samples of my writing.

Deep reinforcement learning for quantum control

There are three main subfields in modern machine learning: supervised learning, unsupervised learning, and reinforcement learning. Supervised learning can be summarized as training a neural network to learn a function that maps input data to some output. The function is inferred by comparing the current output of the network to the desired output and updating the network parameters to reduce the difference. Unsupervised learning finds commonalities in unlabeled input data and is used to group, classify, or categorize it. Reinforcement learning differs from both: an agent learns how to behave in a desired way by taking actions in an environment and observing the effect of each action on the environment. To define the “optimal” behavior of the agent, we give it feedback in the form of a reward based on the effect of its previous action.
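As a minimal sketch of the supervised-learning loop described above, the Python below fits a straight line instead of a neural network (a simplification assumed to keep the example short). It compares the model's output with the desired output through a mean-squared-error loss and updates the two parameters by gradient descent. The data, the learning rate, and the function name `train_linear` are hypothetical.

```python
# Hypothetical training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # model parameters, initialised to zero
    n = len(xs)
    for _ in range(epochs):
        # Difference between the current output and the desired output.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Take a small step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train_linear(xs, ys)
print(w, b)  # should be very close to 2.0 and 1.0
```

A neural network replaces `w * x + b` with a more flexible function, but the training loop keeps the same shape: compute outputs, measure the error, and nudge the parameters.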

In this article, I give a thorough introduction to Deep Q-Learning, one of the first deep reinforcement learning algorithms (proposed in 2013). Then I show how it can be applied to perform state transfer in a two-state quantum system.
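The idea behind Deep Q-Learning can be illustrated with plain tabular Q-learning, where a lookup table plays the role that the neural network plays in the deep version. The sketch below is a hypothetical toy, not the quantum-control setup from the article: an agent on a chain of five states is rewarded only for reaching the last state, and it learns Q-values with the update Q(s, a) ← Q(s, a) + α[r + γ max Q(s′, ·) − Q(s, a)]. The environment, the names `step`, `choose_action`, `train` and `greedy_policy`, and all hyperparameters are assumptions made for illustration.

```python
import random

# Hypothetical toy environment: states 0..N_STATES-1 on a line. The agent starts
# in state 0 and gets a reward of 1 only when it reaches the last state.
N_STATES = 5
ACTIONS = (-1, +1)  # index 0: move left, index 1: move right


def step(state, action):
    """Apply an action; return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q_row, epsilon, rng):
    """Epsilon-greedy choice, breaking ties between equal Q-values at random."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    best = max(q_row)
    return rng.choice([i for i, v in enumerate(q_row) if v == best])


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[s][a] estimates the discounted return of taking action a in state s.
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning target: reward plus discounted best value of the next
            # state (no future value once the terminal state is reached).
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best action (-1 or +1) in each non-terminal state of the learned table."""
    return [ACTIONS[row.index(max(row))] for row in q[:-1]]


q = train()
print(greedy_policy(q))
```

After training, the greedy policy should move right in every non-terminal state. Deep Q-Learning keeps the same update target but replaces the table `q` with a neural network that maps a state to one value per action.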

Is information physical?

In 1961, Rolf Landauer proposed a solution to an old thought experiment, Maxwell’s demon, by declaring that information is physical and that erasing one bit of information requires a minimum amount of energy, k_B T ln 2 (where k_B is Boltzmann's constant and T the temperature), to be dissipated as heat. His main argument was that information is inevitably inscribed in a physical medium, e.g. the charge of an electron, the position of a particle, or its mass. Information is therefore not an abstract entity but exists only through a physical representation. This idea has become very popular in physics and, more recently, in popular science communication.
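To give a sense of scale, the sketch below evaluates Landauer's bound, k_B T ln 2 per erased bit, at an assumed room temperature of 300 K. The function name `landauer_bound` is hypothetical; the Boltzmann constant is its exact SI value.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact by SI definition)


def landauer_bound(temperature_kelvin, bits=1):
    """Minimum heat in joules dissipated when erasing `bits` bits at temperature T."""
    return bits * K_B * temperature_kelvin * math.log(2)


# At an assumed room temperature of 300 K:
print(f"{landauer_bound(300):.3e} J")  # prints 2.871e-21 J
```

The bound is tiny compared with the energy today's electronics dissipate per operation, which is one reason it matters mainly as a question of principle.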

However, this notion is quite different from what the public normally thinks of as information: words in a book, an address, or even something as abstract as a person's mood on a given day. It is not clear what is meant by the physical nature of information, nor whether it is a useful concept for thinking about the world. The idea also has critics, who argue that it brings nothing new to the table: in the end all we have is energy and matter, and what we choose to call it does not matter. In this essay I cover these points in detail and discuss their relation to two theories of truth in epistemology: the correspondence and coherence theories.

Entropy production and information processing in stochastic thermodynamics: Optimization, measurement, and erasure

This is my Ph.D. thesis. It is an article-based thesis, in which I introduce the key concepts needed to understand the accompanying papers. I have made an effort to make it understandable, if read from the beginning, for anyone with a bachelor's degree in physics or math.

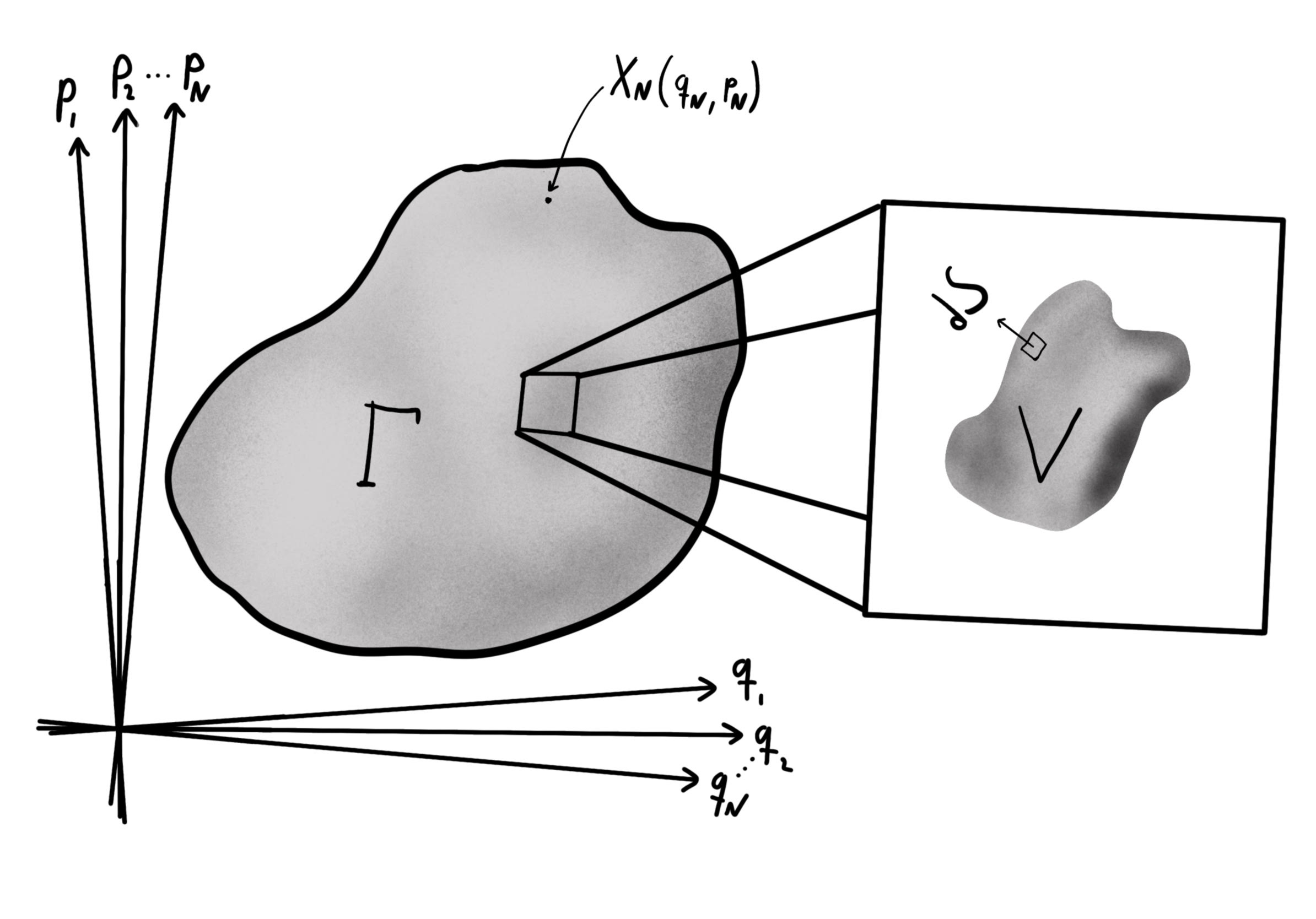

Concise introduction to Statistical Mechanics

In this document, I give an introduction to the foundations of statistical mechanics, based on phase-space analysis and ensemble theory. The subject is dense for beginners, but if you can read and understand this document, you will have a good understanding of the foundations of equilibrium statistical physics. Good luck!
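A central result of ensemble theory is the canonical (Boltzmann) distribution, p_i = exp(−β E_i) / Z, with β = 1/(k_B T) and partition function Z = Σ_i exp(−β E_i). The sketch below assumes a discrete set of energy levels rather than a continuous phase space, and works in units where k_B = 1; the function names and the two-level example are hypothetical.

```python
import math


def canonical_distribution(energies, beta):
    """Boltzmann probabilities p_i = exp(-beta E_i) / Z for discrete energy levels.

    Units with k_B = 1 are assumed, so beta = 1 / T.
    """
    e_min = min(energies)
    # Shifting all energies by the ground-state energy avoids overflow at large
    # beta; the common factor exp(beta * e_min) cancels between p_i and Z.
    weights = [math.exp(-beta * (e - e_min)) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]


def mean_energy(energies, beta):
    """Ensemble average <E> = sum_i p_i E_i."""
    probs = canonical_distribution(energies, beta)
    return sum(p * e for p, e in zip(probs, energies))


# Hypothetical two-level system with energy gap 1, at temperature T = 1 (beta = 1).
levels = [0.0, 1.0]
print(canonical_distribution(levels, beta=1.0))
print(mean_energy(levels, beta=1.0))
```

At β = 0 (infinite temperature) both levels are equally likely, and as β grows the system settles into the ground state.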

Connection between information theory and statistical mechanics

Entropy is a concept introduced in the 19th century to describe the efficiency of heat engines. Shannon's information entropy was introduced in 1948 to describe the efficiency of information transmission and signal processing. The statistical-mechanical (Gibbs) form of entropy turned out to have the same mathematical form as Shannon's, differing only by a constant factor set by Boltzmann's constant and the choice of logarithm base. This observation points to a deep connection between physics and information theory.
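A quick numerical check of this relationship: for a probability distribution p_i, the Gibbs entropy S = −k_B Σ p_i ln p_i and the Shannon entropy H = −Σ p_i log₂ p_i satisfy S = k_B ln 2 · H. The distribution and the function names below are hypothetical, chosen so that H comes out to exactly 1.5 bits.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K


def shannon_entropy_bits(probs):
    """Shannon entropy H = -sum p log2 p, in bits (terms with p = 0 contribute 0)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def gibbs_entropy(probs):
    """Gibbs entropy S = -k_B sum p ln p, in J/K."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


p = [0.5, 0.25, 0.25]
h = shannon_entropy_bits(p)
s = gibbs_entropy(p)
print(h)                        # prints 1.5
print(s / (K_B * math.log(2)))  # equal to h up to floating-point rounding
```

The conversion factor k_B ln 2 is the same one that appears in Landauer's bound on the cost of erasing a bit.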